AI and the great silence

Predictive tools colloquially called “AI” have been the main watercooler topic for fiction writers for a while now. I have friends I love and respect who have found interesting ways to incorporate these tools into their creative processes, but most writers I know (including some of those same writers) are very concerned about the ethics of how some of the tools are trained, and the possible labour effects.

But for me, it wasn’t writing but teaching that really flipped a switch in my brain and made me recognize the magnitude of the potential threat. This is an incredibly fast-moving field, and the consequences are going to be upon us before many people are even aware of the causes.

I’m a contract instructor at a university, where I teach one course every year. This year, our union went on strike over a number of issues, including the university’s demand for an in-perpetuity licence on the course material we create (video lectures, quizzes, assignments, reading lists, etc.). Now, owning the intellectual property to my course material is not monetarily valuable to me in itself; even if I were to leave and teach the same course elsewhere, for example, I always revise my courses to suit the context and to improve them year over year. But what DID worry me is the question of WHY the university wanted this in the first place. The only possible reason would be to allow it to teach our courses without us.

Any teacher who has stepped in for another knows that using someone else’s material is awkward and usually creates more work than otherwise. And any teacher knows that good course material changes over the years. So I cannot imagine a human instructor finding much value in inheriting my videos or reading quizzes. The only reason I can possibly imagine the university wanting an “in perpetuity” licence to my work is to allow it to carry on teaching my courses without any human instructor at all — even after I’m long dead and the material itself is out of date. This sort of thing has, in fact, already happened at a Canadian university.

In a flash, I had a vision of the future: Courses taught by chatbot (we already have AI therapists and service desks, so why not?) with videos provided by humans who may have once lived. (Should the humans prove refractory, as our union did, the AI can make the videos too.) AI tools would grade papers, which would themselves, of course, be written by AI, based on textbooks that were also written by AI. It seems ridiculous, but what part of this system does not already exist to some creeping degree? A university could easily become, even if unofficially, a network of AIs talking to each other (or more likely, a single AI tool talking to itself) while the humans have absented themselves entirely from the culture they created. We would exist in an ongoing mausoleum of our ancestors’ culture, knowing ourselves only through the plausible-sounding answers spat out by whatever succeeds ChatGPT.

This vision reminded me of another. Ray Bradbury’s 1951 story “The Pedestrian” envisions a city in the year 2053, in which no one is on the street because everyone is in their house being entertained by predictably comforting content. A single man walks alone in this city, past “The tombs, ill-lit by television light, where the people sat like the dead, the gray or multicolored lights touching their faces, but never really touching them.” He never meets another human, but is accosted by a police car that talks to itself.

Bradbury’s vision is the antithesis of E.M. Forster’s exhortation to “only connect.” In his novel Howards End, the phrase refers not only to connections between human beings but also to the connection between “the prose and the passion,” a connection without which we are “meaningless fragments.”

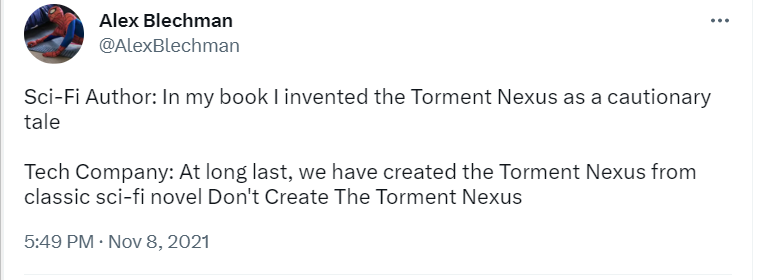

Among the many things AI will do is create film and television. (See the current WGA strike, which is also partly over the question of whether AI can be used to replace human writers.) We’ll all be entertained by algorithmically produced videos and fed new bestsellers every year by an algorithm trained on a corpus from the past, producing nothing new unless it happens to do so by accident, like the proverbial monkeys at typewriters eventually producing Shakespeare.

But hang on, you say. Surely no AI tool could compete on sheer quality with a great writer, artist or filmmaker. So aren’t our jobs safe? The problem with that argument is that capitalism does not care about quality — a point made elegantly by Ted Chiang, one of the world’s best science fiction writers, in a recent essay on AI in an economic context. And capitalism is what produces books and movies. Yes, AI will not stop anyone from producing art for art’s sake. But getting paid to make art is not just a nice perk; it is what allows people to keep on making art when they have children to feed and elders whose long-term care bills are in the thousands every month. And student loans to pay, back from when they got their degrees for feeding AI papers into the maw of an AI professor. I guarantee tuition costs will not go down.

So we will keep working, just not at any of the things that cause human knowledge and culture to advance, because we will have outsourced that to the machines.

And art feeds on art, so even if human-produced art still exists, any reduction or replacement is a loss. The less humanity there is in the art that is produced and sold, the less chance there is for that connection between a book produced by a unique individual and a reader who will, in turn, produce a unique book. A hive mind, I think, cannot produce art. Already, there’s a strong temptation for writers to produce work that will not be too uncomfortable or surprising to readers, because we now live and die by algorithms and star ratings.

I’m keenly aware that for as long as there have been humans, humans have been predicting that some newfangled technology or social change would create a world of hollow men. Bradbury’s story takes its aesthetic from good old-fashioned TV of the 1950s. I am old enough to remember when people worried that nobody would know anything in a world in which Google exists.

I don’t know. Maybe everything will be fine. But I find it increasingly difficult not to suspect that everything will get cheaper, shabbier, more homogeneous, more palatable to the powerful. Just because we have seen it coming for a while doesn’t mean it won’t come.

We have never been here before, not precisely here. This is not only a new technology advancing with alarming speed; it’s a new technology arriving in the context of an extremely fragile social, economic and political order: a world of increasing inequality, of climate shocks, of tyranny. A world in which supply chains have broken down. A world in which fundamental quality-of-life indicators for many people, even in rich nations such as the United States, are declining. In other words, it’s not a world in which new technology is likely to lift all boats.

One of the books that made me a writer, God Emperor of Dune, includes a reflection on the “Butlerian Jihad,” which, in the future envisioned by Frank Herbert, takes place thousands of years from now. “‘The target of the Jihad was a machine-attitude as much as the machines,’ Leto said. ‘Humans had set those machines to usurp our sense of beauty, our necessary selfdom out of which we make living judgments. Naturally, the machines were destroyed.’”

Destroying the machines is (probably?) not on the table, although with each passing year I gain more respect for the frame-breakers of the early 19th century. The technology is, of course, not where the fault lies; the problem is in the economic system that is so vulnerable to its misuse. Because the people who hold the money will absolutely misuse this; they already are.

Writers, artists, editors and other creative people are already pushing back by trying to make use of AI tools taboo. One reason for this is practical: some magazines, such as Clarkesworld, have been overwhelmed by submissions from people who think that if they just throw enough generated content at the wall, they’re bound to get a story accepted. People who think there’s enough money in fiction to make this worthwhile are in for disappointment, but a sucker’s born on the internet every minute, I guess. The deluge of AI submissions is tantamount to a denial of service attack on publications. I’m positive agents and book publishers will be next, if they’re not already. This will likely lead to more barriers against human writers trying to get their work read by someone who might be able to get it published.

Shame and taboo come with their own costs (we’ve already seen how the mere suspicion of using AI can cause a pile-on against possibly innocent artists). And ultimately, I don’t think they’re enough to defend against the capitalist impulse to reduce overhead. I don’t know what the solution is. We push back how we can, and one of the most powerful ways to do that is labour action, as the screenwriters’ strike and my own university experience suggest (we did get the university to back down on the in-perpetuity licence). The problems with AI are not problems with the technology in isolation, and the solutions won’t be either.